Calamity Joe, the smart git, chose to do all of his cinematic work directly in UE4.

I mean, ok, he is a young intern fellow, full of optimism and willingness to learn. So the opposite of myself. I have over 8 years' experience in the animation industry. I am used to animating every detail in Maya (or 3ds Max) and rendering it out from there. I am used to the standard 30fps, with some room for editing and tweaking things in post.

Great stuff.

Do I know games engines? No.

Or at least I didn’t.

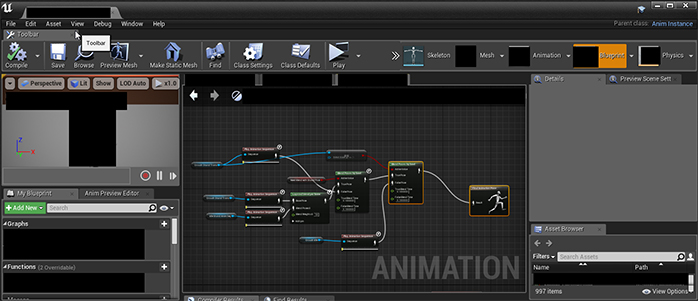

Not until about 6 months ago, when I was rudely dropped into Animation Blueprints and a whole world of ungodly foulness that felt like playing with Lego blindly in the dark, expecting something magic to happen when you turned the lights on. And now for something completely different: Sequencer.

Now I have to admit, after a little while of pretending to be a Technical Animator (that is to say, someone whose job is to implement individual animations in a game), I managed to learn quite a bit, though I still wasn’t sold on it as a career direction.

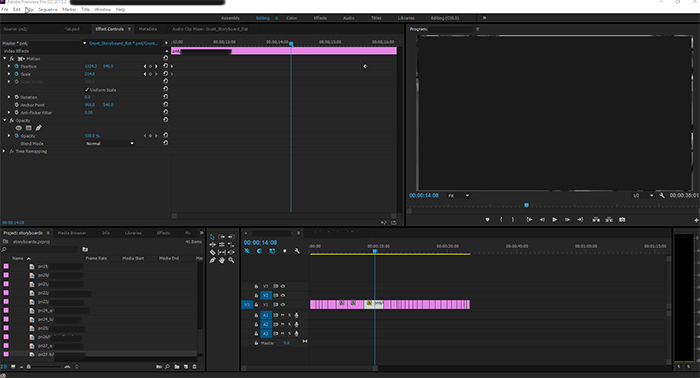

Unreal Editor’s Sequencer

In UE4, the Sequencer Editor gives the user a way to create and edit in-game cinematics. You are given a simple timeline into which you can import “Shots” – other timelines which hold any information specific to that shot. In these “Shots” you would have any actors you need (and in this case actors means anything doing something, i.e. characters, cameras, particle effects, etc.), and manipulate them as you wish. Each actor is represented as a new layer in the shot’s timeline, and you can add any property belonging to that actor as an animatable “Track”. By way of example, say your actor was a light that you wanted to move into a certain position and blink. You would add a ‘Transform’ track, which holds all of the translation and rotation information, and a ‘Light Intensity’ track to control how bright the light is.

With these individual tracks, you can now scrub your way through the timeline, stopping where you want something to happen, add a keyframe with a click of a button, and boom, you’ve got your simple animation, you lucky devil, you!
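To make the track-and-keyframe idea a bit more concrete, here is a toy model of a single animatable track, such as the light's intensity. It is a hypothetical sketch in plain Python, not UE4's API: the `Track` class and its methods are made up for illustration. It also assumes straight-line (linear) interpolation between keys, whereas Sequencer's own curves can be smooth and are shaped in its curve editor.

```python
from bisect import bisect_right
from dataclasses import dataclass, field


@dataclass
class Track:
    """Hypothetical single-value track (e.g. light intensity); not a UE4 class."""

    # Sorted list of (time_in_seconds, value) keyframes.
    keys: list = field(default_factory=list)

    def add_key(self, time: float, value: float) -> None:
        # Replace any key already at this time, then keep keys sorted by time.
        self.keys = [k for k in self.keys if k[0] != time]
        self.keys.append((time, value))
        self.keys.sort(key=lambda k: k[0])

    def evaluate(self, time: float) -> float:
        # "Scrubbing": find the value of the track at any point in time.
        if not self.keys:
            raise ValueError("track has no keys")
        times = [t for t, _ in self.keys]
        i = bisect_right(times, time)
        if i == 0:
            return self.keys[0][1]  # before the first key: hold its value
        if i == len(self.keys):
            return self.keys[-1][1]  # after the last key: hold its value
        (t0, v0), (t1, v1) = self.keys[i - 1], self.keys[i]
        alpha = (time - t0) / (t1 - t0)  # 0..1 progress between the two keys
        return v0 + alpha * (v1 - v0)  # linear interpolation (an assumption)


# A light that blinks on and off over one second.
light = Track()
light.add_key(0.0, 0.0)
light.add_key(0.5, 5000.0)
light.add_key(1.0, 0.0)
print(light.evaluate(0.25))  # halfway up the ramp: 2500.0
```

Three keys are all it takes: everything in between is filled in by the interpolation, which is exactly what you see when you scrub the timeline.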

Now this is all pretty basic from an animation point of view. Sure, it is really impressive to chop and edit shots with other shots without having to shift a bajillion keyframes from one place to another, and yeah, the addition of its own curve editor makes it really easy to manipulate the flow of each animation track, but that’s just like playing with After Effects, only fully in 3D.

When it comes to character animation, though, it is definitely worth doing all of that in Maya or Max. Do your little walk cycle, or little fight sequence or whatever, and export each character’s animation as you would for normal games animation. You can then add this whole animation as a block into one of the shots. In fact, you can add two animations as blocks into a shot! Screw it! Have as many animations as you want, coz you can blend between them! Want your walk to turn into a fight scene? Simple, blend it! Don’t like where your actor is walking? Fine, animate its transform track and change his path. The more I think about this, the more I am impressed by it and consider it a great tool for putting together some quick and easy animations.
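Blending between two animation blocks boils down to a crossfade: over the overlap, the incoming animation's weight ramps from 0 to 1 while the outgoing one fades out. The sketch below is a simplified, hypothetical illustration in plain Python, not how UE4 implements it. It assumes a linear ramp and treats a pose as a dictionary of made-up channel names holding single numbers; real engines blend bone rotations with quaternion maths rather than per-number averages.

```python
def crossfade_weight(time: float, blend_start: float, blend_end: float) -> float:
    """Weight of the incoming animation: 0 before the blend, 1 after, linear in between."""
    if blend_end <= blend_start:
        raise ValueError("blend_end must be after blend_start")
    if time <= blend_start:
        return 0.0
    if time >= blend_end:
        return 1.0
    return (time - blend_start) / (blend_end - blend_start)


def blend_pose(pose_a: dict, pose_b: dict, weight: float) -> dict:
    """Per-channel linear mix of two poses (simplification: channels are plain numbers)."""
    return {ch: (1.0 - weight) * pose_a[ch] + weight * pose_b[ch] for ch in pose_a}


# Hypothetical channels sampled from a walk and a fight animation at the same moment.
walk = {"spine_rot_z": 10.0, "hips_height": 95.0}
fight = {"spine_rot_z": 30.0, "hips_height": 85.0}

w = crossfade_weight(1.5, blend_start=1.0, blend_end=2.0)  # halfway through the blend
print(blend_pose(walk, fight, w))  # {'spine_rot_z': 20.0, 'hips_height': 90.0}
```

Lengthening the overlap gives a softer transition from walk to fight; shortening it makes the change snappier.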

Issues

Unfortunately it’s not as simple as that, not for me anyway. Being well established in my ways, there were things I wanted to do that I couldn’t. Or I could, but the whole thing was hacky or clunky to use. Simple things, like making the viewport show you EXACTLY what your render will see, or setting up and locking frame dimensions that won’t scale weirdly as the viewport changes. Getting the viewport to show me the Field of View on a per-shot basis was my biggest frustration. I had a lot of shots that worked well in Maya, with the camera position and field of view set up. However easy it was to translate that info into UE4, scrubbing through the sequence just seemed to stick with one FOV. It was only when I rendered the sequence that I realised this wasn’t the case.

On occasion, actors that I hadn’t placed into certain shots would find their way in there anyway, standing T-posed in the middle of the action. Once again, this would not show up in the viewport, only in the render. To get rid of them, I had to make those actors invisible.

Lastly, due to the nature of games engines, which run in terms of seconds rather than frames, there is a slight motion blur flash when actors jump from one position to the next. This is most prominent on the cuts, where the master camera jumps from one position to the next. Unfortunately I don’t have the time to troubleshoot this issue, and so will have to throw the rendered video into Premiere to edit the flashes out.

Post Effects

I have to admit, however, one thing that I have really enjoyed is the ability to create post effects live on a 3D scene. Typically, if you wanted to render out depth of field or change the scene’s lighting, you would have to re-render entire shots, or render out a separate pass (a layer used in the compositing/post-production phase). With the built-in camera effects, Post Process Volume assets, Atmospheric Fog effects and other things, you can do pretty much anything you could in After Effects directly in engine, in REAL TIME!

Shocked Face.

Colour correction, camera shakes, even particle effects like explosions or gunshots are as easy as dragging and dropping the required effect into the shot and attaching it to the required bone or socket.

Now I’ve got to say, I have only experimented with this, using the basic presets and not delving too deeply into how UE4 can be used as an effective, time-saving tool for Previs, Cinematics and animation production. I haven’t even looked into integrating sound effects! But when I look beyond the flaws I have found and learn to use it as it is intended (rather than trying to shoe-horn a Maya scene and workflow into an engine), I think it has a lot of potential, and I look forward to delving a little deeper into what UE4 has to offer the visual storyteller.

Anyway, that’s it for this little four week experiment. Hopefully next I’ll get round to doing a bit more fiction. We will see.

Have a WONDROUS weekend, Y’all!

Oz